IntroVAE

Improving quality of generated images through an introspective manner. Combines the high quality generations of GANs while maintaining a latent representation of the images. Please see below for my analysis!

About this Project

This project implements the IntroVAE.

Please see my project on implementing a VAE for more on VAE latent space analysis.

Model checkpoints, parameters files and run files are saved in the predictions folder. See Analysis section below for more on each run.

IntroVAE background

IntroVAE is influenced by two parts, a VAE for it’s stable latent space, and a GAN for it’s high quality image generations. Here, a second objective is added to the VAE, which is for making high quality images. IntroVAE uses the mean squared error loss as a skeleton structure for the GAN, preventing problems like mode collapse. The reconstruction loss term is used as a prior to the model, and the GAN portion will be used to reconstruct better images.

Resources

Check out:

- VAES implementation and VAES Paper

- EBGANS is the gan architecture used.

- IntroVAE original paper for more information on the model architecture.

From VAE to IntroVAE

The architecture of the VAE remains unchanged, there are not additional neurons used. The difference, is where the encoder and decoder are switched along the model pipeline. This is to facilitate the use of an EBGAN. The encoder becomes the discriminator, and the decoder becomes the generator. The latent vector doubles as a energy representation vector when the encoder is being used as a discriminator, and a latent vector during the regular VAE pass through.

Theory

Analysis:

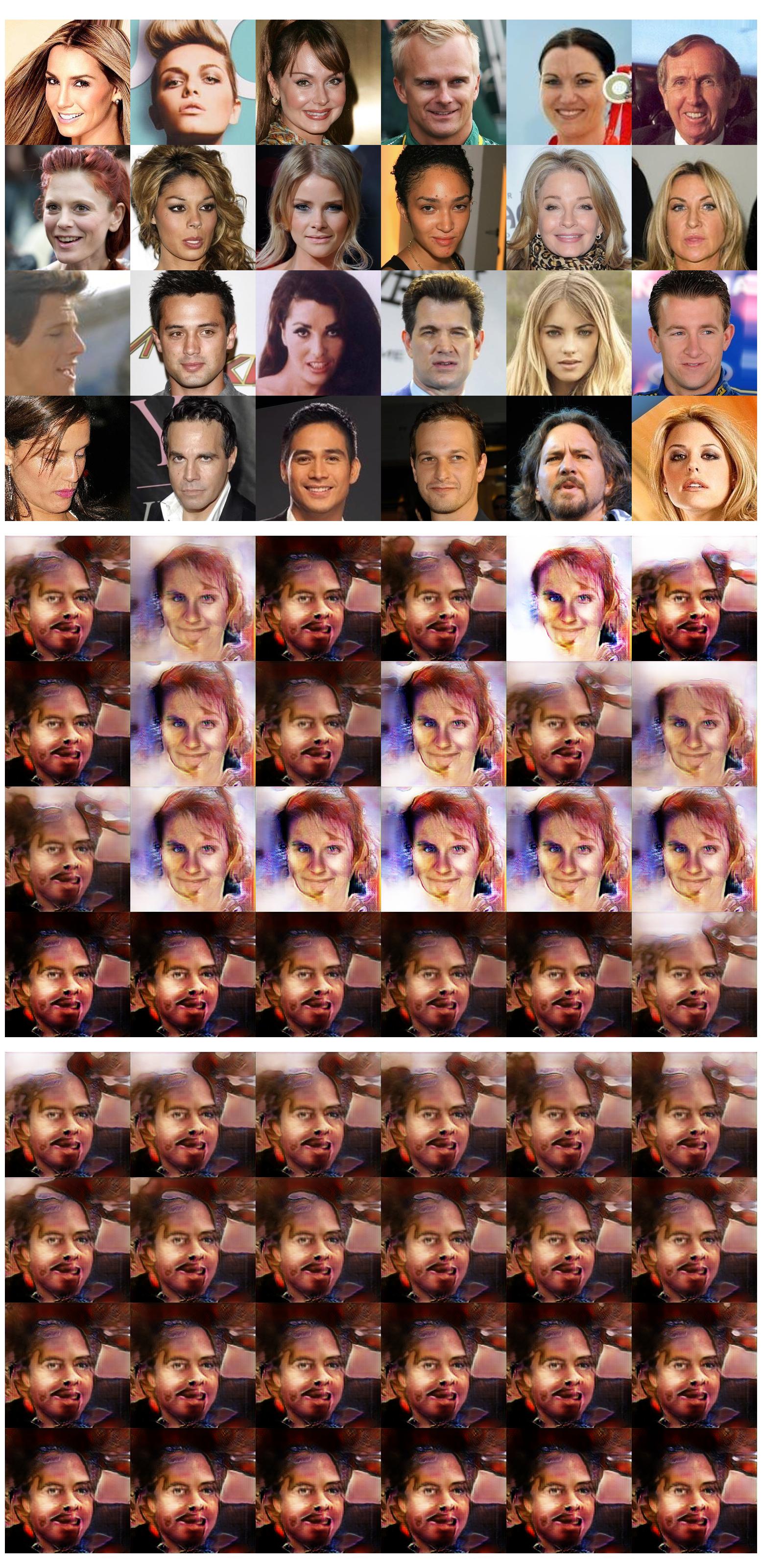

I found that using different parameters than the paper works better for me. Tuning the three main latent hyperparameters will have a large effect on the type of images that are generated. \alpha controls the amount of GAN, if this is 0, the model reduces down to a regular VAE. m is used to separate between high and low regions of energy. \beta is used as a term to control the weight of the reconstruction loss. \beta is used to ground the images, too much, and your images will look unrealistic, too little, and the GAN collapses into a weird images which contain certain aspects of detail, such as skin tecture, and hair strands, but fails to maintain the structured look of a realistic face, there is also a chance of posterior collapse. See below:

higher beta is better for this, accordingly, m should be tuned to be higher. This is because higher beta causes higher regularization loss, and the bound m should be enough to accomodate the higher ends of the regularization loss. However, this doesn’t provide a good latent traversal, so perhaps increasing the beta value would help.

After some tests, we see that higher beta does help. It seems that the beta is giving the generated faces more structure. Whereas alpha is providing more details, such as the strands of hair and skin textures.

I’ll stop this for now due to low computational resources. Generating these high resolution images is quite computationally heavy. Analysis of the effects of the hyperparameters is done, and would just need some more tuning.